Full details are provided in our paper:

Look, Listen and Learn More: Design Choices for Deep Audio Embeddings

J. Cramer, H.-H. Wu, J. Salamon, and J. P. Bello.

IEEE Int. Conf. on Acoustics, Speech and Signal Proc. (ICASSP), pp. 3852-3856, Brighton, UK, May 2019.


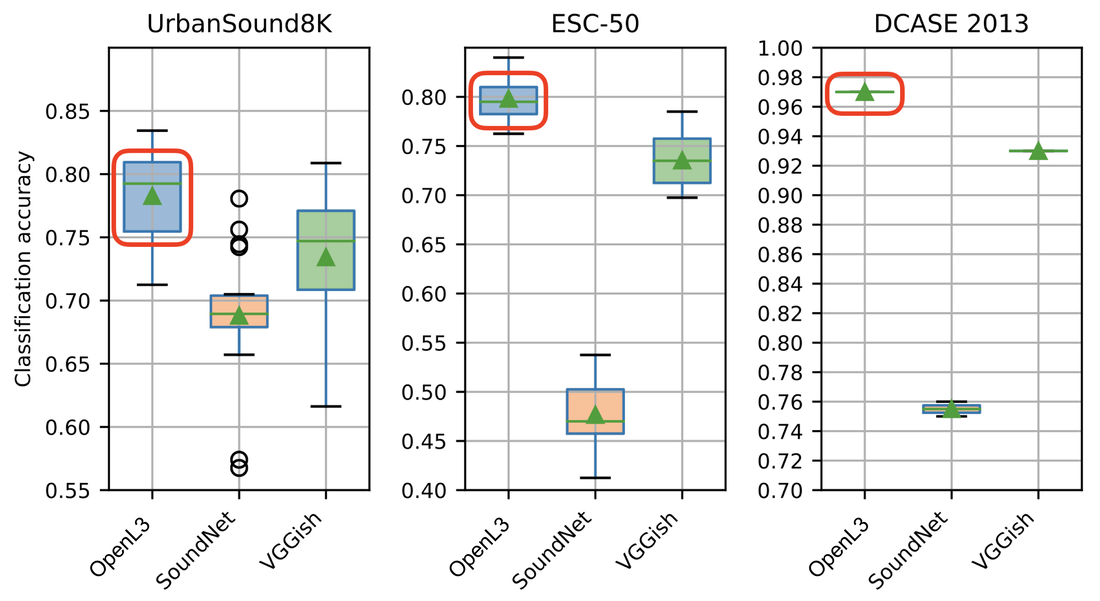

How well does it work?

Using OpenL3

$ pip install openl3

Once installed, OpenL3 can be used from Python as follows. This is the simplest use case, with every parameter left at its default value:

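```python
import openl3
import soundfile as sf

# Load the audio file; sf.read returns the samples and the sample rate
audio, sr = sf.read('/path/to/file.wav')

# Compute the embedding with default parameters; this returns the
# embedding frames and the timestamp of each frame
# (newer OpenL3 releases name this function get_audio_embedding)
embedding, timestamps = openl3.get_embedding(audio, sr)
```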
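The call above returns a matrix with one embedding vector per analysis frame, plus a `timestamps` array giving each frame's time in seconds. When a task needs a single vector per clip or per labeled region, a common (though not OpenL3-specific) approach is to average the frames that fall inside a time range. The sketch below uses plain Python lists so it runs without OpenL3 installed; the helper `mean_pool_segment` and the toy data are hypothetical and not part of the OpenL3 API.

```python
from typing import List, Sequence


def mean_pool_segment(embedding: Sequence[Sequence[float]],
                      timestamps: Sequence[float],
                      start_s: float, end_s: float) -> List[float]:
    """Average the embedding rows whose timestamps fall in [start_s, end_s).

    Hypothetical helper: assumes embedding[i] is the vector for the frame
    at timestamps[i] (in seconds).
    """
    if len(embedding) != len(timestamps):
        raise ValueError("embedding and timestamps must have the same length")
    # Keep only the frames whose timestamp lies inside the segment
    rows = [row for row, t in zip(embedding, timestamps) if start_s <= t < end_s]
    if not rows:
        raise ValueError("no frames fall inside the requested segment")
    dim = len(rows[0])
    # Element-wise mean across the selected frames
    return [sum(row[i] for row in rows) / len(rows) for i in range(dim)]


# Toy stand-ins for the (embedding, timestamps) pair; real OpenL3 output
# has many more dimensions per frame.
toy_embedding = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
toy_timestamps = [0.0, 0.1, 0.2, 0.3]
print(mean_pool_segment(toy_embedding, toy_timestamps, 0.1, 0.3))  # [3.0, 4.0]
```

With the NumPy arrays that OpenL3 actually returns, the same selection can be written as `embedding[(timestamps >= start) & (timestamps < end)].mean(axis=0)`.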

We also provide a command-line interface (CLI), which can be launched by running "openl3" from a terminal:

$ openl3 /path/to/file.wav

Both the Python API and the CLI expose further options, such as the hop size used when extracting the embedding, the output dimensionality of the embedding, and several other parameters. A good place to start is the OpenL3 tutorial.
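Hop size and embedding dimensionality together determine how much output you get per clip. The back-of-the-envelope sketch below assumes roughly one frame every `hop_size` seconds and 32-bit floats; both are simplifying assumptions (the exact frame count also depends on padding), and `estimate_embedding_size` is a hypothetical helper, not part of OpenL3. The dimensionalities 512 and 6144 are the two embedding sizes OpenL3 offers.

```python
from typing import Tuple

BYTES_PER_FLOAT32 = 4  # assumption: embeddings are held as 32-bit floats


def estimate_embedding_size(duration_s: float, hop_size: float,
                            embedding_size: int) -> Tuple[int, int]:
    """Rough (n_frames, n_bytes) estimate for one clip.

    Hypothetical helper: assumes about one frame every `hop_size` seconds,
    so treat the result as an approximation rather than an exact count.
    """
    if duration_s <= 0 or hop_size <= 0:
        raise ValueError("duration_s and hop_size must be positive")
    # round() absorbs floating-point noise such as 0.3 / 0.1 != 3.0
    n_frames = max(1, round(duration_s / hop_size))
    return n_frames, n_frames * embedding_size * BYTES_PER_FLOAT32


# Compare hop sizes and dimensionalities for a one-minute clip
for hop in (0.1, 0.5):
    for dim in (6144, 512):
        frames, nbytes = estimate_embedding_size(60.0, hop, dim)
        print(f"hop={hop}s dim={dim}: ~{frames} frames, ~{nbytes / 1e6:.1f} MB")
```

For a 60-second clip at a 0.1 s hop this gives about 600 frames: roughly 14.7 MB at 6144 dimensions versus about 1.2 MB at 512. Raising the hop size to 0.5 s cuts both figures by a factor of five, at the cost of coarser time resolution.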

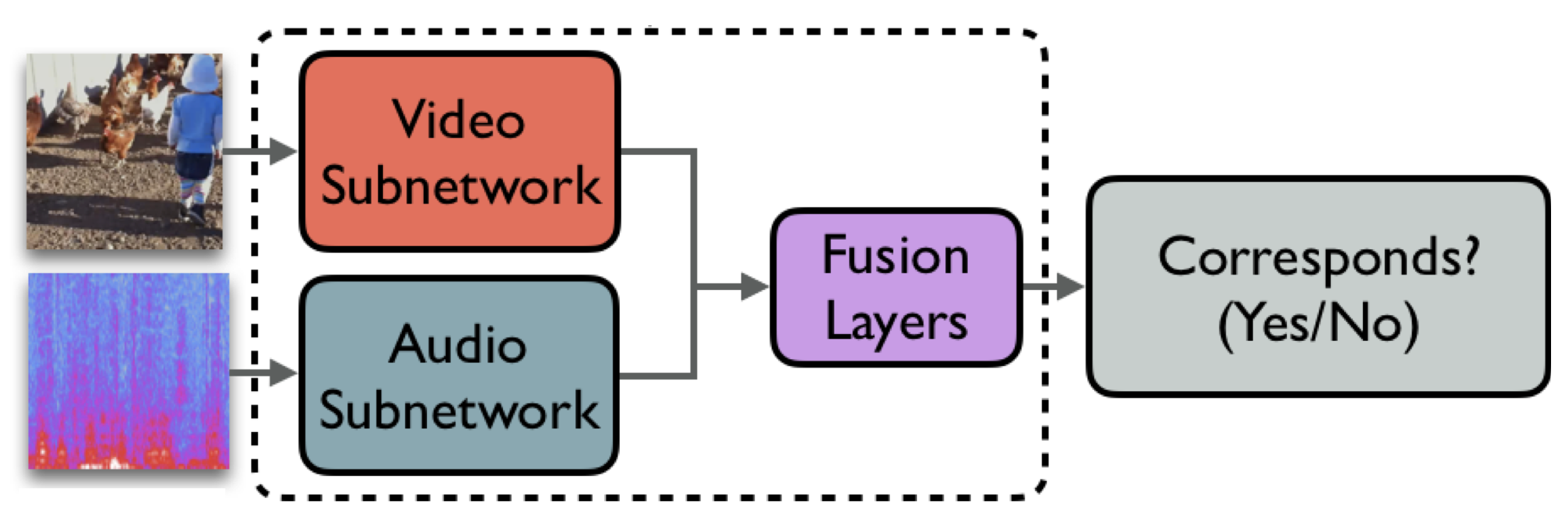

How was OpenL3 trained?

We look forward to seeing what the community does with OpenL3!

...and, if you're attending ICASSP 2019, be sure to stop by our poster on Friday, May 17 between 13:30-15:30 (session MLSP-P17: Deep Learning V, Poster Area G, paper 2149)!
