Look mum, I'm on TV! :)


Fox 5 News' Jessica Formoso interviewed me for their feature article about our SONYC project.

The full article is available here: http://www.fox5ny.com/news/new-york-city-noise-pollution-research


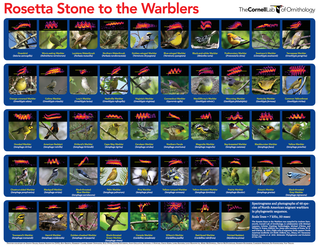

CLO-43SD is targeted at the closed-set N-class problem (identify which of these 43 known species produced the flight call in this clip), while CLO-WTSP and CLO-SWTH are targeted at the binary open-set problem (given a clip, determine whether it contains a flight call from the target species or not). The latter two come pre-sorted into two subsets: Fall 2014 and Spring 2015. In our study we used the fall subset for training and the spring subset for testing, simulating adversarial yet realistic conditions that require a high level of model generalization.

For further details about the datasets see our article:

Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring
J. Salamon, J. P. Bello, A. Farnsworth, M. Robbins, S. Keen, H. Klinck and S. Kelling
PLOS ONE 11(11): e0166866, 2016. doi: 10.1371/journal.pone.0166866.
[PLOS ONE][PDF][BibTeX]

You can download all 3 datasets from the Dryad Digital Repository at this link.

A white-throated sparrow, one of the species targeted in the study. Image by Simon Pierre Barrette, license CC-BY-SA 3.0.
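To make the fall/spring evaluation protocol described above concrete, here is a minimal sketch of a season-based split. The `Clip` record, its field names, and the season labels `"fall2014"` and `"spring2015"` are assumptions made up for illustration; the actual layout of the CLO-WTSP and CLO-SWTH downloads may differ, so adapt the loading step to the real files.

```python
from collections import namedtuple

# Hypothetical record layout (not the datasets' actual schema):
# path to the audio clip, the season it was recorded in, and a binary label.
Clip = namedtuple("Clip", ["path", "season", "is_target"])


def split_by_season(clips, train_season="fall2014", test_season="spring2015"):
    """Return (train, test) so that the two sets come from different seasons."""
    train = [c for c in clips if c.season == train_season]
    test = [c for c in clips if c.season == test_season]
    return train, test


# Toy example with made-up clips.
clips = [
    Clip("a.wav", "fall2014", True),
    Clip("b.wav", "fall2014", False),
    Clip("c.wav", "spring2015", True),
]
train, test = split_by_season(clips)
```

Splitting by season rather than at random keeps recordings from the same migration period out of both sets, which is what makes the test conditions harder and closer to a real deployment.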

Automatic classification of animal vocalizations has great potential to enhance the monitoring of species movements and behaviors. This is particularly true for monitoring nocturnal bird migration, where automated classification of migrants’ flight calls could yield new biological insights and conservation applications for birds that vocalize during migration. In this paper we investigate the automatic classification of bird species from flight calls, and in particular the relationship between two different problem formulations commonly found in the literature: classifying a short clip containing one of a fixed set of known species (N-class problem) and the continuous monitoring problem, the latter of which is relevant to migration monitoring. We implemented a state-of-the-art audio classification model based on unsupervised feature learning and evaluated it on three novel datasets, one for studying the N-class problem including over 5000 flight calls from 43 different species, and two realistic datasets for studying the monitoring scenario comprising hundreds of thousands of audio clips that were compiled by means of remote acoustic sensors deployed in the field during two migration seasons. We show that the model achieves high accuracy when classifying a clip to one of N known species, even for a large number of species. In contrast, the model does not perform as well in the continuous monitoring case. Through a detailed error analysis (that included full expert review of false positives and negatives) we show the model is confounded by varying background noise conditions and previously unseen vocalizations. We also show that the model needs to be parameterized and benchmarked differently for the continuous monitoring scenario. Finally, we show that despite the reduced performance, given the right conditions the model can still characterize the migration pattern of a specific species. The paper concludes with directions for future research.

The full article is freely available (open access) on PLOS ONE:

Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring
J. Salamon, J. P. Bello, A. Farnsworth, M. Robbins, S. Keen, H. Klinck and S. Kelling
PLOS ONE 11(11): e0166866, 2016. doi: 10.1371/journal.pone.0166866.
[PLOS ONE][PDF][BibTeX]

Along with this study, we have also published the three new datasets for bioacoustic machine learning that were compiled for it.

Today SONYC was featured on several major news outlets including the New York Times, NPR and Wired! This follows NYU's press release about the official launch of the SONYC project. Needless to say, I'm thrilled about the coverage the project's launch is receiving. Hopefully it is a sign of the great things yet to come from this project, though I should note that it has already resulted in several scientific publications.

Here's the complete list of media articles (that I could find) covering SONYC. The WNYC radio segment includes a few words from yours truly :)

BBC World Service - World Update (first minute, then from 36:21)

Sounds of New York City (German Public Radio)

If you're interested in learning more about the SONYC project, have a look at the SONYC website. You can also check out the SONYC intro video:

I'm extremely excited to report that our Sounds of New York City (SONYC) project has been granted a Frontier award from the National Science Foundation (NSF) as part of its initiative to advance research in cyber-physical systems, as detailed in the NSF’s press release. NYU has issued a press release providing further information about the SONYC project and the award.

From the NYU press release:

The project – which involves large-scale noise monitoring – leverages the latest in machine learning technology, big data analysis, and citizen science reporting to more effectively monitor, analyze, and mitigate urban noise pollution.

Known as Sounds of New York City (SONYC), this multi-year project has received a $4.6 million grant from the National Science Foundation and has the support of City health and environmental agencies.

Further information about the project can be found on the SONYC website. You can also check out the SONYC intro video:
