To learn more, please read our paper:

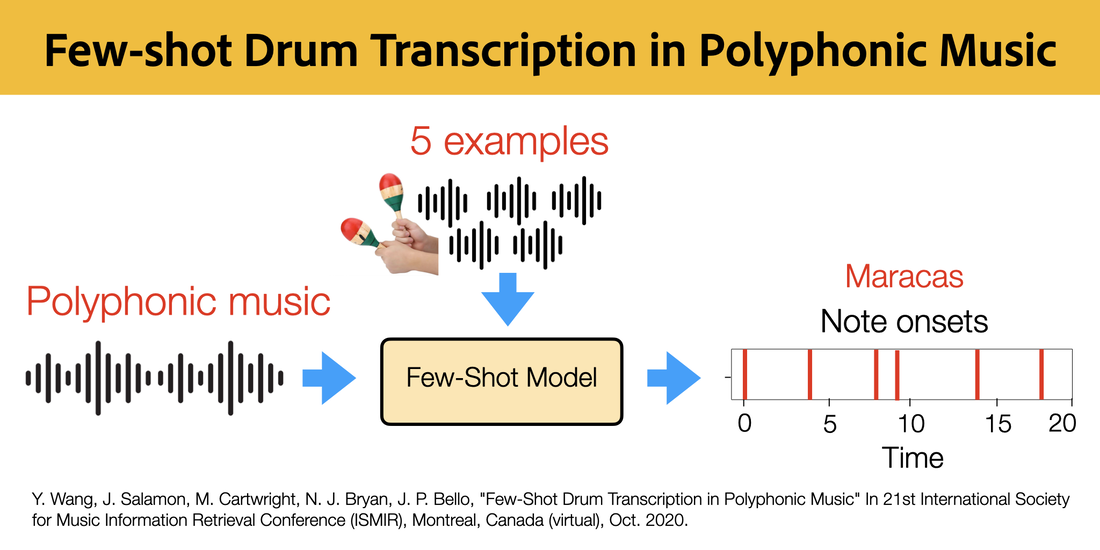

Few-Shot Drum Transcription in Polyphonic Music

Y. Wang, J. Salamon, M. Cartwright, N. J. Bryan, J. P. Bello

In 21st International Society for Music Information Retrieval Conference (ISMIR), Montreal, Canada (virtual), Oct. 2020.

You can find more related materials, including a short video presentation and a poster, here:

https://program.ismir2020.net/poster_1-14.html
