A list of code, software, and data projects I am currently involved in or have been involved in:

Software

MELODIA

Melodia automatically estimates the pitch of a song's main melody. It implements the melody extraction algorithm I developed as part of my PhD, which estimates the fundamental frequency (f0) corresponding to the pitch of the predominant melodic line of a piece of polyphonic music. Details about the algorithm are provided in this paper. Melodia is available as a Vamp plug-in, which can be used with Sonic Visualiser, Sonic Annotator, or directly in Python.
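
When Melodia is run from Python or a Vamp host, its melody output is a frame-wise sequence of pitch values. The sketch below turns such a sequence into timestamped rows, the same two-column (timestamp, Hz) layout MeloSynth reads. It assumes a 44.1 kHz sample rate, a 128-sample hop, frame i starting at i × hop / sample rate, and that unvoiced frames are reported as non-positive values; all of these are assumptions to check against your host's actual output, and the function names are hypothetical.

```python
import csv
import io

# Assumed analysis settings (hypothetical defaults for this sketch).
SAMPLE_RATE = 44100  # Hz
HOP_SIZE = 128       # samples between consecutive frames


def pitch_track_to_rows(f0_values, sample_rate=SAMPLE_RATE, hop_size=HOP_SIZE):
    """Pair each frame's pitch with its start time; non-positive values become 0.0 (unvoiced)."""
    rows = []
    for i, f0 in enumerate(f0_values):
        timestamp = i * hop_size / sample_rate
        rows.append((timestamp, f0 if f0 > 0 else 0.0))
    return rows


def rows_to_csv(rows):
    """Serialize (timestamp, Hz) pairs as a two-column CSV string."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for timestamp, f0 in rows:
        writer.writerow([f"{timestamp:.6f}", f"{f0:.3f}"])
    return buf.getvalue()


rows = pitch_track_to_rows([220.0, -220.0, 0.0, 440.0])
print(rows_to_csv(rows))
```

Mapping unvoiced frames to 0.0 keeps one row per frame, so the time grid stays regular even where there is no melody.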

CREPE

CREPE is a monophonic pitch tracker based on a deep convolutional neural network that operates directly on the time-domain waveform. As of 2018, CREPE is state-of-the-art, outperforming popular pitch trackers such as pYIN and SWIPE. The repository makes it easy to run CREPE as a simple command-line Python script. For further details about the algorithm and its performance, please see our paper.
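
The CREPE paper describes the network's output as a vector of 360 activations, one per pitch bin, with bins spaced 20 cents apart over six octaves. The sketch below decodes one such vector into a frequency by taking a weighted average of the bins around the strongest one, and reports the peak activation as a confidence value. The first-bin frequency (about 32.70 Hz, C1), the 10 Hz cents reference, and the ±4-bin averaging window are assumptions for illustration; in practice the crepe package performs this decoding for you.

```python
import math

N_BINS = 360
CENTS_PER_BIN = 20.0
REF_HZ = 10.0  # assumed reference frequency for the cents scale
FIRST_BIN_CENTS = 1200 * math.log2(32.70 / REF_HZ)  # assumed: bin 0 sits at ~C1
HALF_WINDOW = 4  # assumed: average the peak bin with 4 neighbours on each side


def bin_to_cents(index):
    return FIRST_BIN_CENTS + CENTS_PER_BIN * index


def cents_to_hz(cents):
    return REF_HZ * 2 ** (cents / 1200)


def decode_salience(activations):
    """Return (frequency_hz, confidence) from one frame of bin activations."""
    peak = max(range(len(activations)), key=lambda i: activations[i])
    lo = max(0, peak - HALF_WINDOW)
    hi = min(len(activations), peak + HALF_WINDOW + 1)
    weights = activations[lo:hi]
    total = sum(weights)
    if total <= 0:
        return 0.0, 0.0  # no usable activation in this frame
    # Weighted average in cents gives sub-bin resolution finer than 20 cents.
    cents = sum(w * bin_to_cents(i) for i, w in zip(range(lo, hi), weights)) / total
    return cents_to_hz(cents), activations[peak]


frame = [0.0] * N_BINS
frame[99], frame[100], frame[101] = 0.5, 1.0, 0.5
freq, confidence = decode_salience(frame)
```

Averaging only near the peak, rather than over all 360 bins, keeps a weak secondary peak an octave away from pulling the estimate off target.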

SCAPER

Scaper is a library for soundscape synthesis and augmentation. Using Scaper, one can automatically synthesize soundscapes with corresponding ground-truth annotations. It is useful for running controlled ML experiments (ASR, sound event detection, bioacoustic species recognition, etc.) and experiments to assess human annotation performance. It is also potentially useful for generating data for source separation experiments and for generating ambisonic soundscapes. More details in this paper.
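
Scaper's own API lets you specify event distributions (labels, source files, onset times, SNRs, and so on) and then generate audio plus annotations. As a rough illustration of the underlying idea only, the stdlib sketch below places foreground events at random onsets in a background, scales each to a randomly drawn SNR, and records an annotation for every event. It is not Scaper's API: the function name is hypothetical, and the SNR here is computed from plain RMS, a simplification of how Scaper measures loudness.

```python
import math
import random


def rms(signal):
    """Root-mean-square level of a non-empty list of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


def synthesize_soundscape(background, events, sample_rate, snr_range=(0.0, 10.0), seed=0):
    """Mix labelled foreground events into a background; return (mixture, annotations).

    `events` is a list of (label, samples) pairs. Each event gets a random onset and
    a random SNR in dB (relative to the background RMS) drawn from `snr_range`.
    """
    rng = random.Random(seed)  # seeded so the same specification reproduces the same soundscape
    mixture = list(background)
    background_rms = rms(background)
    annotations = []
    for label, samples in events:
        max_start = len(mixture) - len(samples)
        if max_start < 0:
            continue  # event longer than the soundscape: skip it
        start = rng.randint(0, max_start)
        snr_db = rng.uniform(*snr_range)
        gain = 10 ** (snr_db / 20) * background_rms / rms(samples)
        for i, sample in enumerate(samples):
            mixture[start + i] += gain * sample
        annotations.append({
            "label": label,
            "onset": start / sample_rate,
            "offset": (start + len(samples)) / sample_rate,
            "snr_db": snr_db,
        })
    return mixture, annotations


sr = 100
background = [0.01 * math.sin(0.7 * n) for n in range(10 * sr)]
events = [("siren", [math.sin(0.3 * n) for n in range(2 * sr)]), ("dog_bark", [1.0, -1.0] * 25)]
mix, ann = synthesize_soundscape(background, events, sr)
```

Because the annotations are produced by the same code that places the events, they are exact by construction, which is what makes synthesized soundscapes useful for controlled experiments.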

mir_eval

Python library for computing common heuristic accuracy scores for various music/audio information retrieval/signal processing tasks. In other words, DIY MIR evaluation! More details in this paper.
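
As an example of the kind of score mir_eval computes, here is a from-scratch sketch of raw pitch accuracy for melody extraction: the fraction of voiced reference frames whose estimated pitch lies within 50 cents of the reference. mir_eval provides this as mir_eval.melody.raw_pitch_accuracy (and a full set of melody metrics via mir_eval.melody.evaluate), including resampling both sequences to a common time grid. The sketch assumes the frames are already aligned and follows the convention that a negative estimate marks an unvoiced frame that still carries a pitch guess.

```python
import math


def hz_to_cents(freq_hz, ref_hz=10.0):
    """Convert a positive frequency to cents relative to ref_hz."""
    return 1200 * math.log2(freq_hz / ref_hz)


def raw_pitch_accuracy(ref_freqs, est_freqs, cent_tolerance=50.0):
    """Fraction of voiced reference frames with an estimate within cent_tolerance.

    Assumes both sequences share the same time grid. Reference frames <= 0 are
    unvoiced and ignored; the sign of an estimate is dropped, so a negative value
    (unvoiced, but with a pitch guess) is still scored on pitch.
    """
    voiced = [(ref, abs(est)) for ref, est in zip(ref_freqs, est_freqs) if ref > 0]
    if not voiced:
        return 0.0
    hits = sum(
        1 for ref, est in voiced
        if est > 0 and abs(hz_to_cents(est) - hz_to_cents(ref)) <= cent_tolerance
    )
    return hits / len(voiced)


score = raw_pitch_accuracy([220.0, 220.0, 0.0, 440.0], [221.0, 300.0, 100.0, -440.0])
```

Here frame 1 (300 Hz against 220 Hz) misses, frame 2 is excluded because the reference is unvoiced, and the other two frames count as hits.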

JAMS

A JSON Annotated Music Specification for Reproducible MIR Research. In other words, a structured format for storing any kind of music annotation commonly used in MIR research that is easy to parse, validate, manipulate, and evaluate (using mir_eval). For details about the original JAMS specification, see this paper; for a more current description of the (much evolved) specification, see this technical report.
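
To make the structure concrete, the sketch below builds a small JAMS-style document by hand with Python's json module: file metadata plus a list of annotations, each tagged with a namespace and holding time/duration/value/confidence observations. This only approximates the layout for illustration; the real schema has additional fields and rules, and in practice the jams library (e.g. jams.JAMS and jams.Annotation) builds and validates documents for you. The function name and the example values are hypothetical.

```python
import json


def make_jams_like(title, duration, namespace, observations):
    """Build a JAMS-style dict holding a single annotation.

    `observations` is a list of (time, duration, value, confidence) tuples.
    """
    return {
        "file_metadata": {"title": title, "duration": duration},
        "annotations": [
            {
                "namespace": namespace,
                "annotation_metadata": {},
                "data": [
                    {"time": t, "duration": d, "value": v, "confidence": c}
                    for t, d, v, c in observations
                ],
                "sandbox": {},
            }
        ],
        "sandbox": {},
    }


doc = make_jams_like("example song", 4.0, "beat", [(0.5, 0.0, 1, 1.0), (1.0, 0.0, 2, 1.0)])
text = json.dumps(doc, indent=2)  # plain JSON, so any language can parse it
parsed = json.loads(text)
beat_times = [obs["time"] for obs in parsed["annotations"][0]["data"]]
```

Storing every annotation as the same kind of observation list, distinguished only by its namespace, is what lets generic tools parse, validate, and evaluate annotations of very different tasks.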

ESSENTIA

Essentia is an open-source C++ library for audio analysis and audio-based music information retrieval. It contains an extensive collection of reusable algorithms which implement audio input/output functionality, standard digital signal processing blocks, statistical characterization of data, and a large set of spectral, temporal, tonal and high-level music descriptors. More details in this paper.
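
Essentia implements descriptors like these efficiently in C++ (with Python bindings). To show what a spectral descriptor measures, the sketch below computes a spectral centroid, the magnitude-weighted mean frequency of a frame, from scratch in Python using a naive O(N²) DFT. It is illustrative only and not Essentia's implementation; the function names are hypothetical.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0..N/2 of a real-valued frame (naive O(N^2) DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of the frame, in Hz."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0  # silent frame: centroid undefined, report 0
    bin_hz = sample_rate / len(frame)
    return sum(k * bin_hz * m for k, m in enumerate(mags)) / total


# A sine exactly on bin 8 of a 64-sample frame at 8 kHz sits at 8 * 8000 / 64 = 1000 Hz.
frame = [math.sin(2 * math.pi * 8 * t / 64) for t in range(64)]
centroid = spectral_centroid(frame, 8000)
```

A bright sound (energy in high bins) has a high centroid, a dull one a low centroid, which is why the centroid is a common timbre descriptor.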

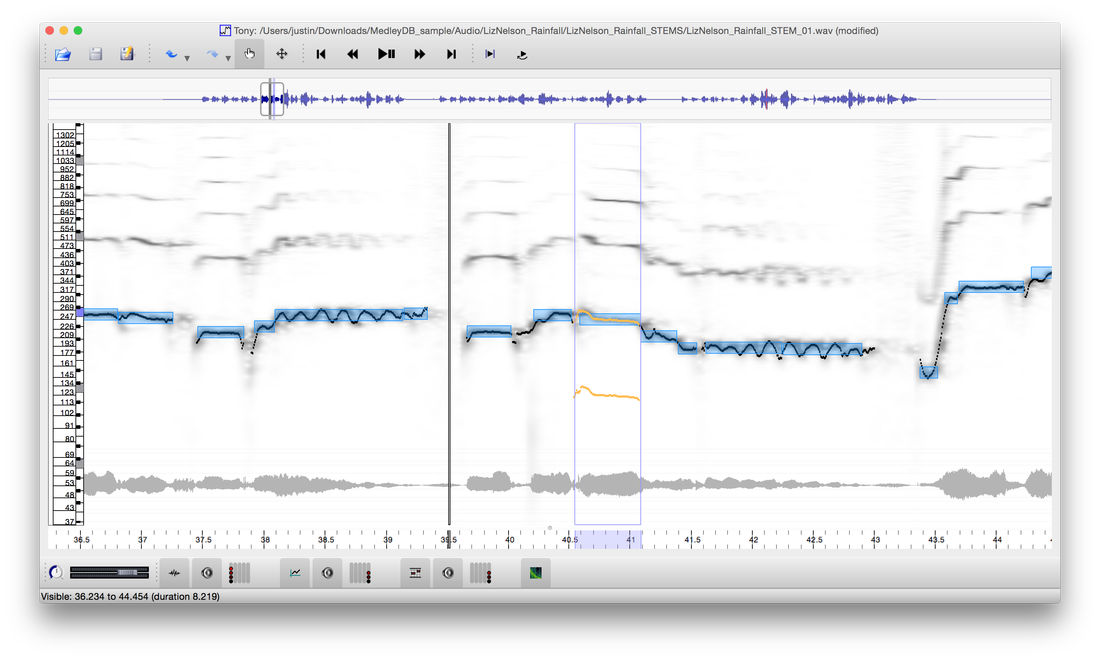

Tony

Tony is a software program for high-quality scientific pitch and note transcription in three steps: automatic visualisation/sonification, easy correction, and export.
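
Tony's own transcription pipeline is considerably more sophisticated, but the basic step of turning a frame-wise pitch track into discrete notes can be illustrated with a deliberately naive rule: convert each voiced frame to the nearest MIDI note number, merge runs of identical notes, and discard runs shorter than a minimum length. The function names and the min_frames parameter are hypothetical; this is not Tony's algorithm.

```python
import math


def hz_to_midi(freq_hz):
    """Convert a frequency in Hz to a (fractional) MIDI note number (A4 = 440 Hz = 69)."""
    return 69 + 12 * math.log2(freq_hz / 440.0)


def segment_notes(f0_track, hop_seconds, min_frames=3):
    """Group consecutive voiced frames that round to the same MIDI note into notes.

    Returns (onset_s, offset_s, midi_note) tuples; f0 values <= 0 are unvoiced.
    """
    notes = []
    current, start = None, 0
    for i, f0 in enumerate(list(f0_track) + [0.0]):  # trailing 0.0 flushes the last note
        pitch = round(hz_to_midi(f0)) if f0 > 0 else None
        if pitch != current:
            if current is not None and i - start >= min_frames:
                notes.append((start * hop_seconds, i * hop_seconds, current))
            current, start = pitch, i
    return notes


track = [440.0] * 10 + [0.0] * 3 + [494.0] * 10
notes = segment_notes(track, hop_seconds=0.01)
```

Rounding every frame independently breaks down on vibrato and glides, which is exactly the kind of case where an interactive correction step like Tony's pays off.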

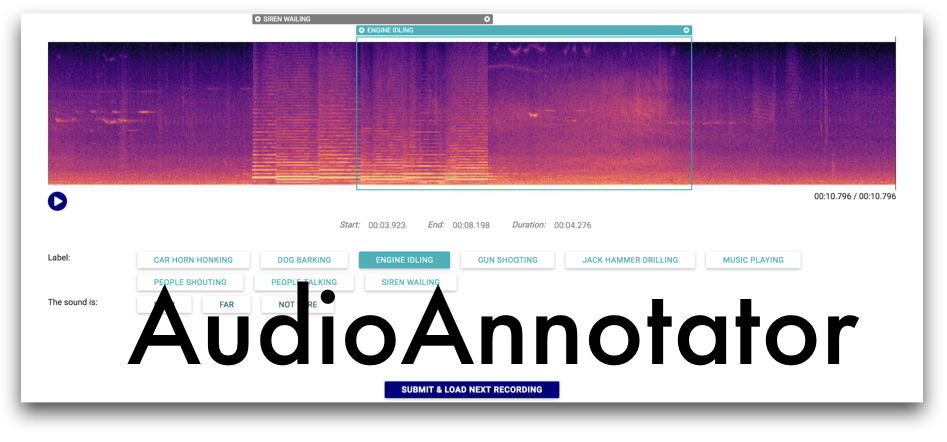

audio-annotator

audio-annotator is a web interface that allows users to annotate audio recordings. It offers three types of audio visualization: invisible, waveform, and spectrogram. It is useful for crowdsourcing audio labels and for running controlled experiments on crowdsourced audio labeling, and it supports feedback mechanisms that give users real-time feedback based on their annotations.

MIR.EDU

MIR.EDU is an open-source Vamp plug-in library written in C++ which implements a basic set of descriptors useful for teaching MIR. The idea is to provide a simple library with clear, well-documented code for learning about audio descriptors (RMS, log attack time, spectral flux, etc.). For details, see this extended abstract.
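
For a flavour of the descriptors MIR.EDU covers, the sketch below computes a frame-wise RMS envelope and a log attack time from such an envelope, in Python rather than the library's C++. Log attack time is taken here as the base-10 logarithm of the time the envelope needs to rise from 20% to 90% of its peak; those thresholds are an assumption (implementations differ), and the function names are hypothetical.

```python
import math


def frame_rms(signal, frame_size, hop_size):
    """RMS of each full frame of `signal`, advancing by hop_size samples."""
    return [
        math.sqrt(sum(s * s for s in signal[i:i + frame_size]) / frame_size)
        for i in range(0, len(signal) - frame_size + 1, hop_size)
    ]


def log_attack_time(envelope, hop_seconds, start_ratio=0.2, stop_ratio=0.9):
    """log10 of the time for the envelope to rise from start_ratio to stop_ratio of its peak."""
    peak = max(envelope)
    start = next(i for i, v in enumerate(envelope) if v >= start_ratio * peak)
    stop = next(i for i, v in enumerate(envelope) if v >= stop_ratio * peak)
    # Clamp to one frame so an instantaneous attack does not produce log10(0).
    return math.log10(max(stop - start, 1) * hop_seconds)


envelope = [i / 10 for i in range(11)] + [1.0] * 5  # linear rise, then sustain
lat = log_attack_time(envelope, hop_seconds=0.01)   # rise from frame 2 to frame 9
```

Percussive sounds rise almost instantly and get a very negative log attack time, while bowed or blown notes rise slowly and score higher.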

MELOSYNTH

MeloSynth is a Python script to synthesize melodies represented as a sequence of pitch (frequency) values. It was written to synthesize the output of the Melodia Melody Extraction Vamp Plugin, but it can be used to synthesize any pitch sequence stored as a two-column txt or csv file, where the first column contains timestamps and the second contains the corresponding frequency values in Hertz (blog post).
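
MeloSynth's actual options are not reproduced here. As a stdlib sketch of synthesizing such a two-column file, the code below assumes sample-and-hold between timestamps and treats non-positive frequencies as silence (both hypothetical conventions for this example), and accumulates phase so the sine does not click when the frequency changes. The function names are hypothetical.

```python
import bisect
import csv
import io
import math
import struct
import wave


def read_pitch_csv(text):
    """Parse two-column (timestamp in seconds, frequency in Hz) CSV text."""
    times, freqs = [], []
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        times.append(float(row[0]))
        freqs.append(float(row[1]))
    return times, freqs


def synthesize(times, freqs, sample_rate=44100, amplitude=0.5):
    """Render a sine that follows the pitch sequence; non-positive values are silence."""
    n_samples = int(round(times[-1] * sample_rate)) + 1
    samples, phase = [], 0.0
    for n in range(n_samples):
        # Hold the most recent annotated value (sample-and-hold between timestamps).
        idx = bisect.bisect_right(times, n / sample_rate) - 1
        f0 = freqs[idx] if idx >= 0 else 0.0
        if f0 > 0:
            # Accumulating phase keeps the waveform continuous when f0 changes.
            phase = (phase + 2 * math.pi * f0 / sample_rate) % (2 * math.pi)
            samples.append(amplitude * math.sin(phase))
        else:
            samples.append(0.0)
    return samples


def to_wav_bytes(samples, sample_rate=44100):
    """Encode float samples in [-1, 1] as 16-bit mono WAV bytes."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        ))
    return buf.getvalue()


times, freqs = read_pitch_csv("0.0,440.0\n0.5,0.0\n1.0,220.0\n")
audio = synthesize(times, freqs, sample_rate=8000)
wav_bytes = to_wav_bytes(audio, sample_rate=8000)
```

Computing each sample as sin(2π · f0 · t) instead would jump in phase whenever f0 changes, producing audible clicks; accumulating the phase avoids that.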

Data

URBAN SOUND & ENVIRONMENTAL SOUND

UrbanSound8K & UrbanSound

Two datasets, UrbanSound and UrbanSound8K, containing labeled sound recordings from 10 urban sound classes: air_conditioner, car_horn, children_playing, dog_bark, drilling, engine_idling, gun_shot, jackhammer, siren, and street_music. The classes are drawn from the urban sound taxonomy. For a detailed description of the datasets, see this paper. These datasets were created as part of the SONYC project.
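
UrbanSound8K is distributed with a metadata CSV and pre-assigned folds, and results are typically reported with cross-validation over those folds rather than a random reshuffle. The sketch below assumes a metadata file with slice_file_name, fold, and class columns (check the header of your copy) and builds a leave-one-fold-out split; the function names and the toy rows are hypothetical.

```python
import csv
import io
from collections import defaultdict


def load_folds(csv_text):
    """Group (file name, class) pairs by the metadata's fold column."""
    folds = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        folds[int(row["fold"])].append((row["slice_file_name"], row["class"]))
    return dict(folds)


def leave_one_fold_out(folds, test_fold):
    """Use one predefined fold for testing and all other folds for training."""
    train = [item for fold, items in sorted(folds.items()) if fold != test_fold for item in items]
    test = list(folds.get(test_fold, []))
    return train, test


metadata = """slice_file_name,fold,class
a.wav,1,dog_bark
b.wav,1,siren
c.wav,2,car_horn
d.wav,3,engine_idling
"""
folds = load_folds(metadata)
train, test = leave_one_fold_out(folds, test_fold=1)
```

Keeping the given folds intact matters because slices cut from the same original recording can otherwise end up in both training and test sets, inflating scores.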

URBAN-SED

URBAN-SED is a dataset of 10,000 soundscapes with sound event annotations, generated using Scaper. The dataset totals almost 30 hours and includes close to 50,000 annotated sound events. Every soundscape contains between 1 and 9 sound events from the following classes: air_conditioner, car_horn, children_playing, dog_bark, drilling, engine_idling, gun_shot, jackhammer, siren, and street_music. Further details about URBAN-SED can be found in Section 3 of the Scaper paper.
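
Sound event detection models are commonly trained on frame-level targets, so a typical first step with a dataset like URBAN-SED is converting each soundscape's event list into a binary frame-by-class activity matrix. The sketch assumes annotations given as tab-separated onset, offset, label lines (in seconds); check the format of the files you download. The function names, hop size, and example events are hypothetical.

```python
import math

CLASSES = [
    "air_conditioner", "car_horn", "children_playing", "dog_bark", "drilling",
    "engine_idling", "gun_shot", "jackhammer", "siren", "street_music",
]


def parse_annotations(text):
    """Parse 'onset<TAB>offset<TAB>label' lines into (onset, offset, label) tuples."""
    events = []
    for line in text.strip().splitlines():
        onset, offset, label = line.split("\t")
        events.append((float(onset), float(offset), label))
    return events


def events_to_frames(events, duration, hop_seconds, classes=CLASSES):
    """Binary matrix [frame][class]: 1 where an event of that class is active."""
    n_frames = int(math.ceil(duration / hop_seconds))
    roll = [[0] * len(classes) for _ in range(n_frames)]
    for onset, offset, label in events:
        col = classes.index(label)
        first = int(math.floor(onset / hop_seconds))
        last = min(n_frames, int(math.ceil(offset / hop_seconds)))
        for frame in range(first, last):
            roll[frame][col] = 1  # overlapping events simply set their own columns
    return roll


events = parse_annotations("0.5\t1.5\tdog_bark\n2.0\t3.0\tsiren\n")
roll = events_to_frames(events, duration=10.0, hop_seconds=0.5)
```

Because each class has its own column, overlapping events of different classes are represented naturally, which is the multi-label setting sound event detection requires.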

MUSIC INFORMATION RETRIEVAL

MedleyDB

MedleyDB is a dataset of annotated, royalty-free multitrack recordings. It was curated primarily to support research on melody extraction, addressing important shortcomings of existing collections. For each song, we provide melody f0 annotations as well as instrument activations for evaluating automatic instrument recognition. The dataset is also useful for research on tasks that require access to the individual tracks of a song, such as source separation and automatic mixing. For further details, see this paper.

Synth Datasets: MDB-melody-synth, MDB-bass-synth, MDB-mf0-synth, MDB-stem-synth, Bach10-mf0-synth

Datasets for research on monophonic, melody, bass, and multiple f0 estimation (pitch tracking). They include both mixed music and monophonic instrument recordings with perfect f0 (pitch) annotations, obtained via the analysis/synthesis approach described in our paper: An analysis/synthesis framework for automatic f0 annotation of multitrack datasets.

MTG-QBH

This dataset includes 118 recordings of sung melodies. The recordings were made as part of the experiments on Query-by-Humming (QBH) reported in this article.

BIOACOUSTICS

Bioacoustics Datasets

I maintain this centralized list of bioacoustics datasets and repositories, which includes data from multiple sources (not just my own datasets) with the goal of being a one-stop shop for bioacoustics data.

Cornell-NYU Bioacoustics Datasets: CLO-43SD, CLO-WTSP & CLO-SWTH

Three datasets of nocturnal avian flight calls for research on bioacoustic species recognition. CLO-43SD contains 5,428 flight calls from 43 different species of North American wood-warblers, for species classification. CLO-WTSP and CLO-SWTH contain a mix of clips with flight calls from a target species (White-throated Sparrow or Swainson's Thrush), clips with flight calls from a variety of other (non-target) species, and clips with confounding factors that are not flight calls. For further details, see this paper.