
The Neumerator will take any audio file and generate a medieval neume-style manuscript from it! As you might have guessed, this is a hack... more specifically, the hack I worked on together with Kristin Olson and Tejaswinee Kelkar during this month's Monthly Music Hackathon NYC (where I also gave a talk about melody extraction).

How does The Neumerator work?

You start by choosing a music recording, for example "Ave Generosa" by Hildegard von Bingen (to keep things simple we'll work with just the first 20 seconds or so):

The first step is to extract the pitch sequence of the melody, for which we use Melodia, the melody extraction algorithm I developed as part of my PhD. The result looks like this (pitch vs time):

Once we have the continuous pitch sequence, we need to discretize it into notes. Whilst we could load the sequence into a tool such as Tony to perform clever note segmentation, for our hack we wanted to keep things simple (and fully automated), so we implemented our own very simple note segmentation process. First, we quantize the pitch curve into semitones:

Then we smooth out very short glitches using a majority-vote sliding window:

Then we can keep just the points where the pitch changes, and we're starting to get close to something that looks kind of "neumey":

Finally, we run it through the neumerator manuscript generator, which applies our secret combination of magic, unicorns and Gregorian chant, and voilà!

Being a hack and all, The Neumerator does have its limitations - currently we can only draw points (rather than connecting them into actual neumes), everything goes on a single four-line staff (regardless of the duration of the audio being processed), and of course the note quantization step is pretty basic. Oh, and the mapping of the estimated notes onto the actual pixel positions of the manuscript is a total hack. But hey, that's what future hackathons are for!
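For the curious, here's a minimal sketch of the three note segmentation steps described above (quantize to semitones, majority-vote smoothing, keep only the pitch changes). It is not the code from the repo: the function names, the 5-frame window, the A4 = 440 Hz reference and the convention that unvoiced frames have a pitch of zero or below are all assumptions made for illustration.

```python
import math
from collections import Counter

A4_HZ = 440.0   # assumed tuning reference
A4_MIDI = 69    # MIDI note number of A4


def hz_to_semitone(f0_hz):
    """Quantize a frequency in Hz to the nearest semitone (MIDI number).

    Frames with f0 <= 0 are treated as unvoiced and mapped to None
    (an assumption about how the pitch tracker marks silence).
    """
    if f0_hz is None or f0_hz <= 0:
        return None
    return int(round(A4_MIDI + 12 * math.log2(f0_hz / A4_HZ)))


def majority_smooth(notes, window=5):
    """Replace each frame by the most common value in a centred window."""
    half = window // 2
    smoothed = []
    for i in range(len(notes)):
        segment = notes[max(0, i - half): i + half + 1]
        smoothed.append(Counter(segment).most_common(1)[0][0])
    return smoothed


def note_onsets(notes):
    """Keep (frame_index, note) only at frames where the note changes."""
    onsets = []
    previous = object()  # sentinel that never equals a real note
    for i, note in enumerate(notes):
        if note != previous and note is not None:
            onsets.append((i, note))
        previous = note
    return onsets


def segment_notes(f0_hz, window=5):
    """Quantize, smooth, then keep only the points where the pitch changes."""
    quantized = [hz_to_semitone(f) for f in f0_hz]
    return note_onsets(majority_smooth(quantized, window))
```

Calling `segment_notes` on a list of per-frame pitch values returns a short list of `(frame_index, note)` pairs, which is roughly the set of points that ends up on the staff. A wider window removes longer glitches but also swallows genuinely short notes, so the window length is the main knob to play with.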

Want to take The Neumerator to the next level? It's all on GitHub: github.com/justinsalamon/neumerator


On Saturday June 20th I gave a talk about melody extraction at the Monthly Music Hackathon NYC, hosted by Spotify's NYC office. The talk, titled "Melody Extraction: Algorithms and Applications in Music Informatics", provided a bird's eye view of my work on melody extraction, including the Melodia algorithm and a number of applications such as query-by-humming, cover song ID, genre classification, automatic transcription and tonic identification in Indian classical music (all of which are mentioned on my melody extraction page and in greater detail in my PhD thesis). In addition to my own talk, we had fantastic talks by NYU's Uri Nieto, Rachel Bittner, Eric Humphrey and Ethan Hein. A big thanks to Jonathan Marmor for organizing such an awesome day!

Oh, we also had a lot of fun working on our hack later on in the day: The Neumerator!

But wait! We can load the pitch curve into Vocaloid and synthesize a pitch-accurate rendition with whichever voice we want, like this one:

Finally, we can mix our new rendition with the original accompaniment to produce our very own robot-remix! Here it is:

TADA!

Here's another example, this time with opera! Here's the original track:

And here's the Melodia+Vocaloid version:

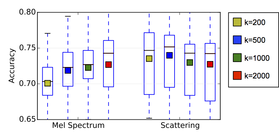

The opportunities for creating new songs (or just wreaking havoc with existing ones) are limitless!

In this paper we evaluate the scattering transform as an alternative signal representation to the mel-spectrogram in the context of unsupervised feature learning for urban sound classification. We show that we can obtain comparable (or better) performance using the scattering transform whilst reducing both the amount of training data required for feature learning and the size of the learned codebook by an order of magnitude. In both cases the improvement is attributed to the local phase invariance of the representation. We also observe improved classification of sources in the background of the auditory scene, a result that provides further support for the importance of temporal modulation in sound segregation.

For further details please see our paper:

J. Salamon and J. P. Bello. "Feature Learning with Deep Scattering for Urban Sound Analysis", 2015 European Signal Processing Conference (EUSIPCO), Nice, France, August 2015. [EURASIP][PDF][BibTex]
