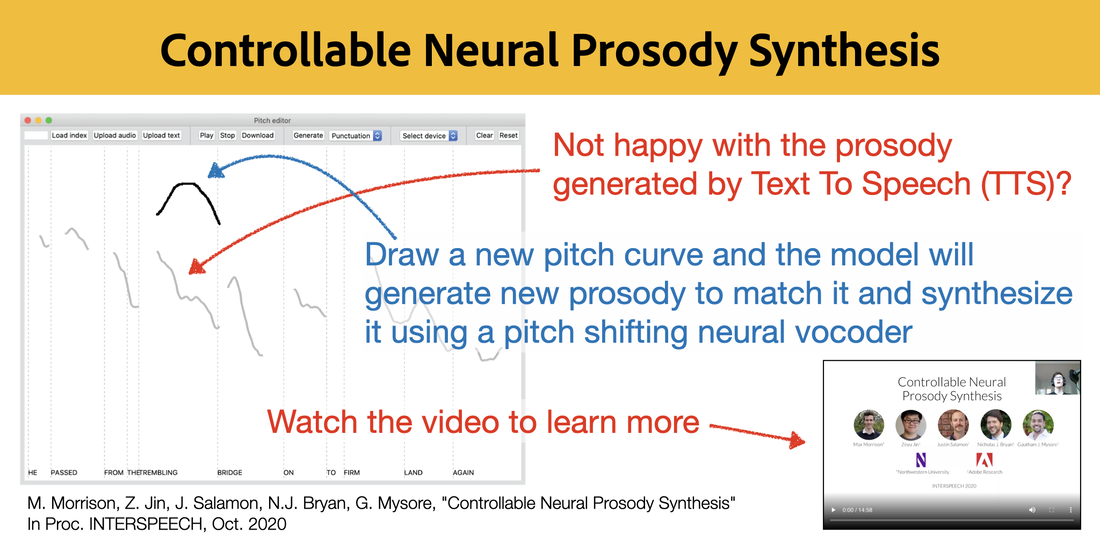

Here's Max's presentation of the work at INTERSPEECH 2020:

To hear more examples, please visit Max's paper companion website.

For further details, please read our paper:

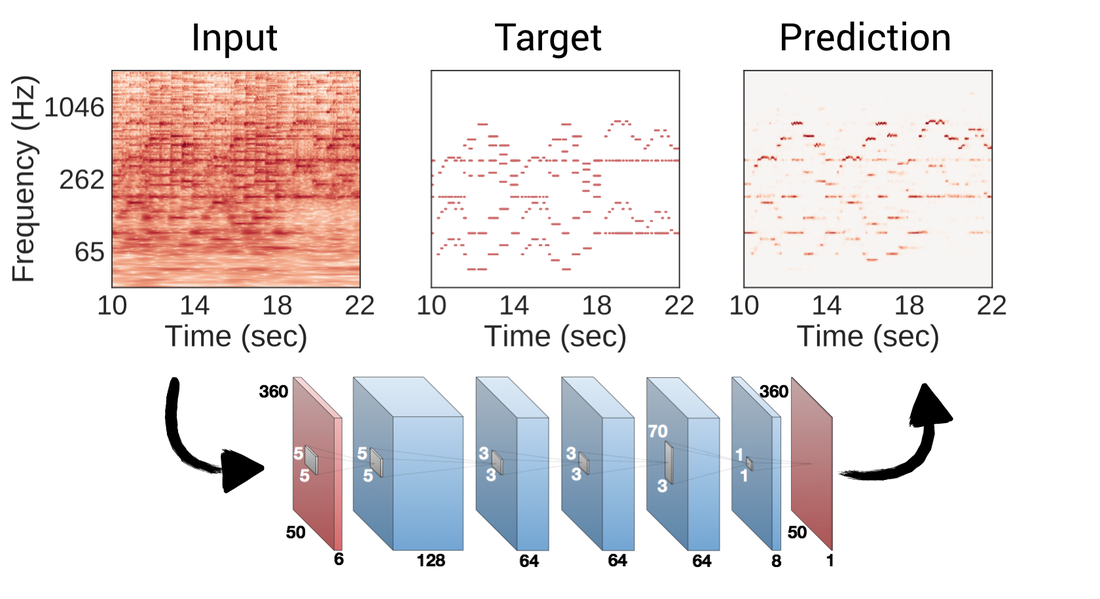

Controllable Neural Prosody Synthesis

M. Morrison, Z. Jin, J. Salamon, N.J. Bryan, G. Mysore

Proc. Interspeech. October 2020.

[INTERSPEECH][PDF][arXiv]
