For further details see our paper:

Deep Convolutional Neural Networks and Data Augmentation For Environmental Sound Classification

J. Salamon and J. P. Bello

IEEE Signal Processing Letters, In Press, 2017.

[IEEE][PDF][BibTeX][Copyright]

Deep Convolutional Neural Networks and Data Augmentation For Environmental Sound Classification

20/1/2017

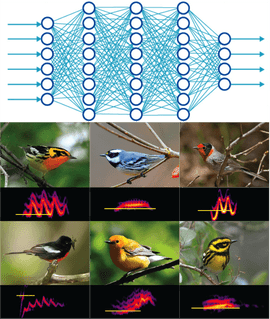

The ability of deep convolutional neural networks (CNNs) to learn discriminative spectro-temporal patterns makes them well suited to environmental sound classification. However, the relative scarcity of labeled data has impeded the exploitation of this family of high-capacity models. This study makes two primary contributions: first, we propose a deep convolutional neural network architecture for environmental sound classification. Second, we propose the use of audio data augmentation for overcoming the problem of data scarcity and explore the influence of different augmentations on the performance of the proposed CNN architecture. Combined with data augmentation, the proposed model produces state-of-the-art results for environmental sound classification. We show that the improved performance stems from the combination of a deep, high-capacity model and an augmented training set: this combination outperforms both the proposed CNN without augmentation and a “shallow” dictionary learning model with augmentation. Finally, we examine the influence of each augmentation on the model’s classification accuracy for each class, and observe that the accuracy for each class is influenced differently by each augmentation, suggesting that the performance of the model could be improved further by applying class-conditional data augmentation.
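The abstract does not list which augmentations were applied, so the following is only a minimal sketch, assuming NumPy and mono waveforms stored as float arrays. It shows one generic audio augmentation (mixing in a background recording at a target signal-to-noise ratio) and one possible shape for class-conditional augmentation. The names `mix_background`, `class_conditional_augment` and the `policies` dictionary are hypothetical illustrations, not the authors' code.

```python
import numpy as np


def mix_background(signal: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add `noise` to `signal`, scaled so the mixture has the requested SNR in dB."""
    # Loop (tile) a short noise clip, then trim it to the length of the signal.
    if len(noise) < len(signal):
        reps = int(np.ceil(len(signal) / len(noise)))
        noise = np.tile(noise, reps)
    noise = noise[: len(signal)]

    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    if noise_power == 0.0:
        return signal.copy()  # silent noise clip: nothing to add

    # Pick scale so that 10 * log10(signal_power / (scale**2 * noise_power)) == snr_db.
    scale = np.sqrt(signal_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return signal + scale * noise


def class_conditional_augment(signal, label, policies, rng):
    """Hypothetical class-conditional augmentation: pick a transform from the
    list registered for this class label, or return the clip unchanged if the
    class has no entry in `policies`."""
    options = policies.get(label)
    if not options:
        return signal
    transform = options[rng.integers(len(options))]
    return transform(signal)


# Example policy (hypothetical): only the "siren" class receives background noise,
# with the SNR drawn uniformly between 0 and 20 dB each time it is applied.
rng = np.random.default_rng(0)
background = rng.standard_normal(1000)  # stand-in for a real background recording
policies = {
    "siren": [lambda x: mix_background(x, background, rng.uniform(0.0, 20.0))],
}
```

Drawing a fresh SNR for every training clip is one way to turn a single labeled example into many distinct augmented copies; a per-class `policies` table is one simple way to act on the observation that each augmentation affects each class differently.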



Automated classification of organisms to species based on their vocalizations would contribute tremendously to our ability to monitor biodiversity, with a wide range of applications in the field of ecology. In particular, automated classification of migrating birds’ flight calls could yield new biological insights and conservation applications for birds that vocalize during migration. In this paper we explore state-of-the-art techniques for large-vocabulary bird species classification from flight calls. Specifically, we contrast a “shallow learning” approach based on unsupervised dictionary learning with a deep convolutional neural network combined with data augmentation. We show that the two models perform comparably on a dataset of 5428 flight calls spanning 43 different species, with both significantly outperforming an MFCC baseline. Finally, we show that by combining the models using a simple late-fusion approach we can further improve the results, obtaining a state-of-the-art classification accuracy of 0.96.

For further details see our paper:

Fusing Shallow and Deep Learning for Bioacoustic Bird Species Classification

J. Salamon, J. P. Bello, A. Farnsworth and S. Kelling

In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, USA, March 2017.

[IEEE][PDF][BibTeX][Copyright]
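The abstract describes the fusion only as a "simple late-fusion approach". One common way to do this, assumed here rather than taken from the paper, is to average the per-class probabilities produced by the two models and predict the class with the highest fused score. A minimal NumPy sketch; the names `late_fusion` and `accuracy` and the toy scores are hypothetical:

```python
import numpy as np


def late_fusion(prob_a, prob_b, weight=0.5):
    """Weighted average of two (n_samples, n_classes) probability matrices."""
    prob_a = np.asarray(prob_a, dtype=float)
    prob_b = np.asarray(prob_b, dtype=float)
    if prob_a.shape != prob_b.shape:
        raise ValueError("both models must score the same samples and classes")
    return weight * prob_a + (1.0 - weight) * prob_b


def accuracy(probs, labels):
    """Fraction of samples whose highest-scoring class matches the label."""
    predictions = np.argmax(probs, axis=1)
    return float(np.mean(predictions == np.asarray(labels)))


# Toy scores from two hypothetical models on three clips and two classes.
shallow = np.array([[0.6, 0.4], [0.3, 0.7], [0.55, 0.45]])
deep = np.array([[0.2, 0.8], [0.6, 0.4], [0.9, 0.1]])
labels = [1, 1, 0]
fused = late_fusion(shallow, deep)
```

On these toy numbers each model alone classifies two of the three clips correctly, while the averaged scores get all three right, because the models make their mistakes on different clips. With real models the `weight` would normally be tuned on held-out data rather than fixed at 0.5.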
