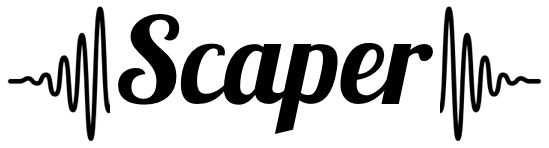

Scaper: a library for soundscape synthesis and augmentation

- Automatically synthesize soundscapes with corresponding ground truth annotations

- Useful for running controlled ML experiments (ASR, sound event detection, bioacoustic species recognition, etc.)

- Useful for running controlled experiments to assess human annotation performance

- Potentially useful for generating data for source separation experiments (might require some extra code)

- Potentially useful for generating ambisonic soundscapes (definitely requires some extra code)
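To make the first bullet concrete, here is a minimal sketch of the idea behind synthesis with built-in ground truth. It is not Scaper's actual API: the `Event` class, the `sample_soundscape` function, and the parameter ranges are all hypothetical, and the audio mixing step is left out entirely. The point is only that when event labels, onsets, durations and SNRs are sampled from distributions, the sampled specification is itself the annotation.

```python
import random
from dataclasses import dataclass


@dataclass
class Event:
    """One sound event in a synthetic soundscape (hypothetical structure)."""
    label: str
    onset: float     # seconds from the start of the soundscape
    duration: float  # seconds
    snr_db: float    # event level relative to the background


def sample_soundscape(labels, duration=10.0, n_events=3, seed=None):
    """Sample a random event specification that doubles as the ground truth.

    The ranges below (0.5-4 s events, 6-30 dB SNR) are illustrative
    assumptions, not values taken from Scaper or URBAN-SED.
    """
    rng = random.Random(seed)
    events = []
    for _ in range(n_events):
        ev_dur = rng.uniform(0.5, 4.0)
        # Choose the onset so the event ends inside the soundscape.
        onset = rng.uniform(0.0, duration - ev_dur)
        events.append(Event(
            label=rng.choice(labels),
            onset=onset,
            duration=ev_dur,
            snr_db=rng.uniform(6.0, 30.0),
        ))
    # Sorting by onset gives an annotation list in temporal order.
    return sorted(events, key=lambda e: e.onset)


if __name__ == "__main__":
    for ev in sample_soundscape(["siren", "car_horn", "dog_bark"], seed=0):
        print(ev)
```

Fixing the seed makes a given soundscape specification reproducible, which is what makes controlled experiments of the kind listed above practical.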

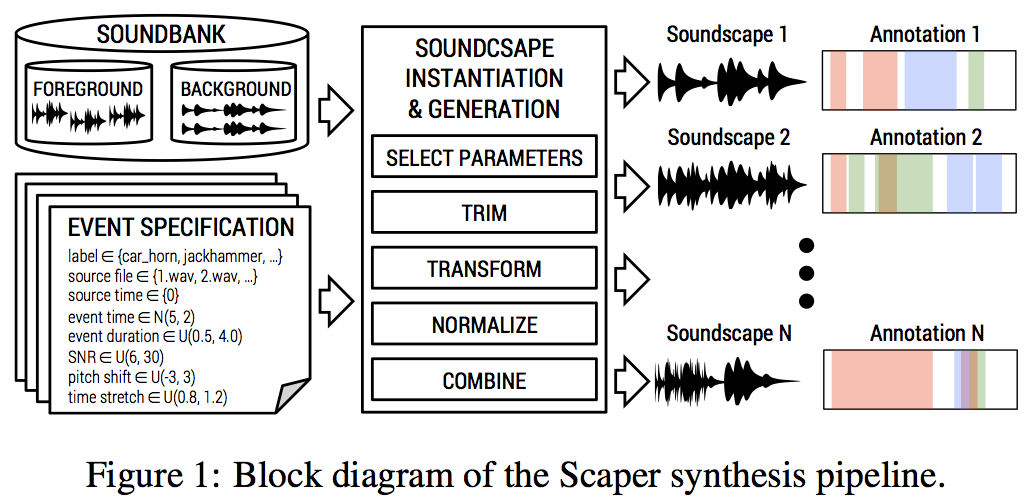

AudioAnnotator: a JavaScript web interface for annotating audio data

- Developed in collaboration with Edith Law and her students at the University of Waterloo's HCI Lab

- A web interface that allows users to annotate audio recordings

- Supports 3 types of visualization (waveform, spectrogram, invisible)

- Useful for crowdsourcing audio labels and for running controlled experiments on the crowdsourcing process itself

- Supports feedback mechanisms for providing real-time feedback to the user based on their annotations

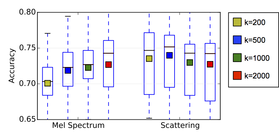

URBAN-SED dataset: a new dataset for sound event detection

- Includes 10,000 soundscapes with strongly labeled sound events generated using scaper

- Totals almost 30 hours and includes close to 50,000 annotated sound events

- Baseline convnet results on URBAN-SED are included in the Scaper paper
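"Strongly labeled" means each event carries an onset and an offset time, rather than just a clip-level tag. As a rough illustration of how such labels are often turned into frame-level training targets for sound event detection, here is a small sketch. The function name `strong_to_frames`, the `(label, onset, offset)` tuple layout, and the hop size are assumptions made for this example; they are not the URBAN-SED file format or part of Scaper.

```python
import math


def strong_to_frames(events, classes, clip_duration, hop=0.1):
    """Convert (label, onset, offset) events into per-class binary frame activity.

    A frame is marked active if the event overlaps any part of it.
    Times are in seconds; `hop` is the frame length (an assumed value).
    """
    n_frames = round(clip_duration / hop)
    grid = {c: [0] * n_frames for c in classes}
    for label, onset, offset in events:
        start = max(0, int(onset / hop))                # first overlapped frame
        stop = min(n_frames, math.ceil(offset / hop))   # one past the last frame
        for i in range(start, stop):
            grid[label][i] = 1
    return grid


if __name__ == "__main__":
    # Hypothetical annotations for a 4-second clip.
    events = [("dog_bark", 1.0, 2.0), ("siren", 0.0, 3.5)]
    activity = strong_to_frames(events, ["dog_bark", "siren"],
                                clip_duration=4.0, hop=0.5)
    for label, frames in activity.items():
        print(label, frames)
```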

Further information about scaper, the AudioAnnotator and the URBAN-SED dataset, including controlled experiments on the quality of crowdsourced human annotations as a function of visualization and soundscape complexity, is provided in the following papers:

Seeing sound: Investigating the effects of visualizations and complexity on crowdsourced audio annotations

M. Cartwright, A. Seals, J. Salamon, A. Williams, S. Mikloska, D. MacConnell, E. Law, J. Bello, and O. Nov.

Proceedings of the ACM on Human-Computer Interaction, 1(2), 2017.

Scaper: A Library for Soundscape Synthesis and Augmentation

J. Salamon, D. MacConnell, M. Cartwright, P. Li, and J. P. Bello.

In IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, Oct. 2017.

We hope you find these tools useful and look forward to receiving your feedback (and pull requests!).

Cheers, on behalf of the entire team,

Justin Salamon & Mark Cartwright.
