
On Friday, June 24th, the popular Science Friday radio show featured a segment about our BirdVox project. The segment included sound bites from fellow BirdVox researcher Andrew Farnsworth and me, followed by a live interview with Juan Pablo Bello.

In the segment we discuss our latest work on automatic bird species classification from flight call recordings captured with acoustic sensors for bioacoustics migration monitoring. You can listen to the BirdVox segment here.


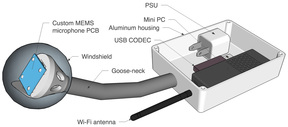

Today I'll be giving an invited talk at the Machine Learning for Music Discovery Workshop as part of the ICML 2016 conference. The talk is about Pitch Analysis for Active Music Discovery:

A significant proportion of commercial music is comprised of pitched content: a melody, a bass line, a famous guitar solo, etc. Consequently, algorithms that are capable of extracting and understanding this type of pitched content open up numerous opportunities for active music discovery, ranging from query-by-humming to musical-feature-based exploration of Indian art music or recommendation based on singing style. In this talk I will describe some of my work on algorithms for pitch content analysis of music audio signals and their application to music discovery, the role of machine learning in these algorithms, and the challenge posed by the scarcity of labeled data and how we may address it.

And here's the extended abstract:

Pitch Analysis for Active Music Discovery J. Salamon Machine Learning for Music Discovery workshop, International Conference on Machine Learning (ICML), invited talk, New York City, NY, USA, June 2016. [PDF]

The workshop has a great program lined up; if you're attending ICML 2016, be sure to drop by!

The urban sound environment of New York City (NYC) can be, amongst other things: loud, intrusive, exciting and dynamic. As indicated by the large majority of noise complaints registered with the NYC 311 information/complaints line, the urban sound environment has a profound effect on the quality of life of the city’s inhabitants. To monitor and ultimately understand these sonic environments, a process of long-term acoustic measurement and analysis is required. The traditional method of environmental acoustic monitoring utilizes short term measurement periods using expensive equipment, setup and operated by experienced and costly personnel.

In this paper a different approach is proposed to this application which implements a smart, low-cost, static, acoustic sensing device based around consumer hardware. These devices can be deployed in numerous and varied urban locations for long periods of time, allowing for the collection of longitudinal urban acoustic data. The varied environmental conditions of urban settings make for a challenge in gathering calibrated sound pressure level data for prospective stakeholders. This paper details the sensors’ design, development and potential future applications, with a focus on the calibration of the devices’ Microelectromechanical systems (MEMS) microphone in order to generate reliable decibel levels at the type/class 2 level.

For further details see our paper:

The Implementation of Low-cost Urban Acoustic Monitoring Devices C. Mydlarz, J. Salamon and J. P. Bello Applied Acoustics, special issue on Acoustics for Smart Cities, 2016. [Elsevier][PDF]

This paper is part of the SONYC project.
