The results: The proposed model produces state-of-the-art results on flight call detection that are robust to environmental changes across time and space.

The surprise: Interestingly, we find that neither context adaptation nor PCEN pre-processing helps significantly on its own, yet combining the two leads to dramatic gains.

The tech: We release BirdVoxDetect, an open-source tool for automatically detecting avian flight calls in continuous audio recordings: https://github.com/BirdVox/birdvoxdetect

Installing BirdVoxDetect (assuming Python is installed) is as easy as running: pip install birdvoxdetect

Full paper:

Robust Sound Event Detection in Bioacoustic Sensor Networks

V. Lostanlen, J. Salamon, A. Farnsworth, S. Kelling, and J.P. Bello

PLoS ONE 14(10): e0214168, 2019. DOI: https://doi.org/10.1371/journal.pone.0214168


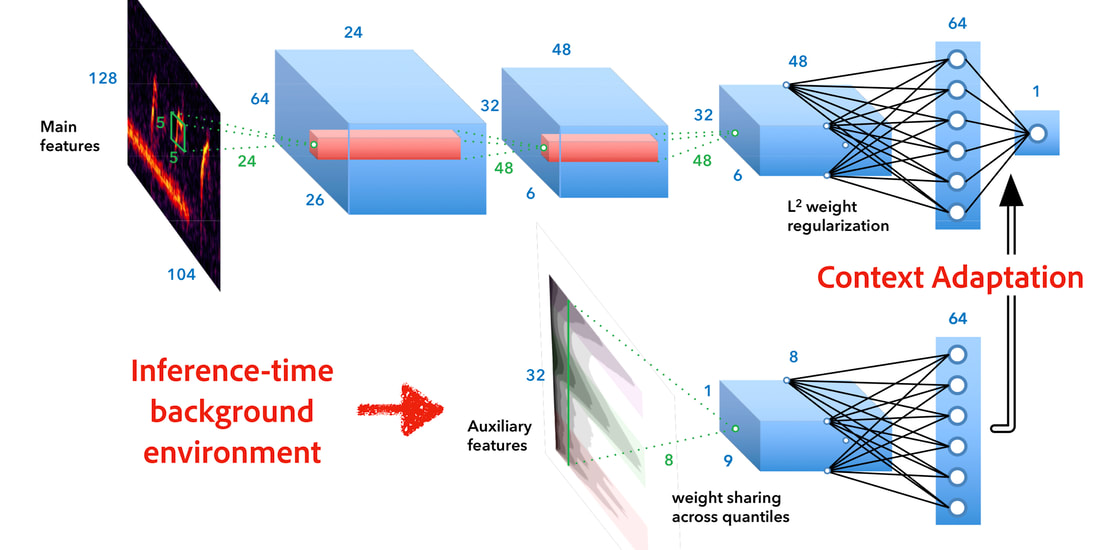

Model block diagram:

Bioacoustic sensors, sometimes known as autonomous recording units (ARUs), can record sounds of wildlife over long periods of time in scalable and minimally invasive ways. Deriving per-species abundance estimates from these sensors requires detection, classification, and quantification of animal vocalizations as individual acoustic events. Yet, variability in ambient noise, both over time and across sensors, hinders the reliability of current automated systems for sound event detection (SED), such as convolutional neural networks (CNN) in the time-frequency domain. In this article, we develop, benchmark, and combine several machine listening techniques to improve the generalizability of SED models across heterogeneous acoustic environments. As a case study, we consider the problem of detecting avian flight calls from a ten-hour recording of nocturnal bird migration, recorded by a network of six ARUs in the presence of heterogeneous background noise. Starting from a CNN yielding state-of-the-art accuracy on this task, we introduce two noise adaptation techniques, respectively integrating short-term (60 ms) and long-term (30 min) context. First, we apply per-channel energy normalization (PCEN) in the time-frequency domain, which applies short-term automatic gain control to every subband in the mel-frequency spectrogram. Secondly, we replace the last dense layer in the network by a context-adaptive neural network (CA-NN) layer, i.e. an affine layer whose weights are dynamically adapted at prediction time by an auxiliary network taking long-term summary statistics of spectrotemporal features as input. We show that PCEN reduces temporal overfitting across dawn vs. dusk audio clips whereas context adaptation on PCEN-based summary statistics reduces spatial overfitting across sensor locations. Moreover, combining them yields state-of-the-art results that are unmatched by artificial data augmentation alone. We release a pre-trained version of our best-performing system under the name of BirdVoxDetect, a ready-to-use detector of avian flight calls in field recordings.
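To make the PCEN step in the abstract more concrete, here is a minimal, dependency-free sketch of per-channel energy normalization in its commonly published form: each subband is divided by a smoothed version of its own recent energy (the automatic gain control), then compressed. This is an illustration only, not the BirdVoxDetect implementation. The function name `pcen`, the list-of-lists input layout, and all parameter values (`s`, `alpha`, `delta`, `r`, `eps`) are assumptions chosen for readability rather than the settings used in the paper.

```python
def pcen(energy, s=0.025, alpha=0.98, delta=2.0, r=0.5, eps=1e-6):
    """Sketch of per-channel energy normalization (PCEN).

    energy: list of frames (time-major); each frame is a list of
            non-negative subband energies, e.g. one mel-spectrogram column.
    All default parameter values are illustrative assumptions.
    """
    if not energy:
        return []
    n_bands = len(energy[0])
    # Initialise the per-band smoother M with the first frame.
    smoothed = list(energy[0])
    out = []
    for frame in energy:
        row = []
        for f in range(n_bands):
            # First-order low-pass filter per subband:
            # M[t] = (1 - s) * M[t-1] + s * E[t]
            smoothed[f] = (1.0 - s) * smoothed[f] + s * frame[f]
            # Automatic gain control: divide by the smoothed energy.
            gain = (eps + smoothed[f]) ** alpha
            # Dynamic range compression with an offset delta.
            row.append((frame[f] / gain + delta) ** r - delta ** r)
        out.append(row)
    return out


# Two stationary backgrounds that differ 100-fold in loudness map to
# nearly the same value when alpha = 1 (full gain normalization).
quiet = [[1.0, 1.0] for _ in range(5)]
loud = [[100.0, 100.0] for _ in range(5)]
quiet_out = pcen(quiet, alpha=1.0)
loud_out = pcen(loud, alpha=1.0)

# A short burst on top of a steady background stands out after PCEN.
burst = [[1.0], [1.0], [1.0], [10.0], [1.0]]
burst_out = pcen(burst)
```

The design point this sketch tries to show is that stationary noise, whatever its level, is flattened toward a constant, while short transients such as flight calls are preserved. In practice one would likely use a vectorized implementation; librosa, for instance, provides a `pcen` function.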
