So... should all machine learning for music be end-to-end? See what we found in the full paper:

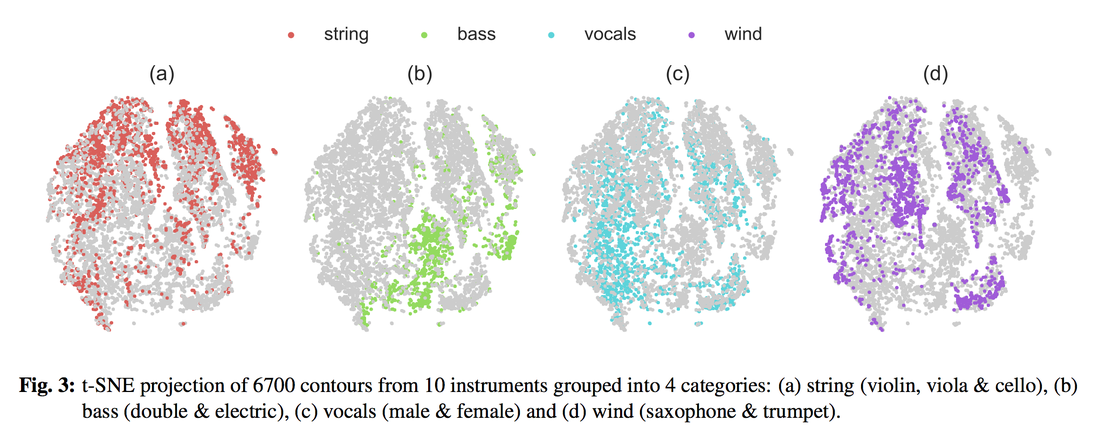

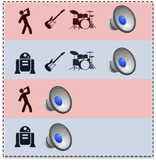

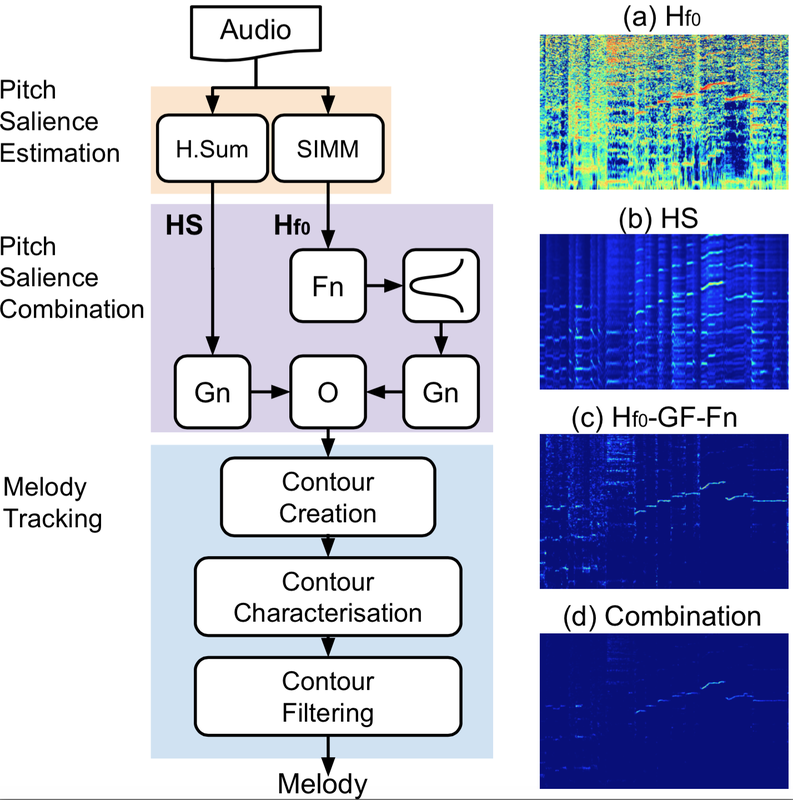

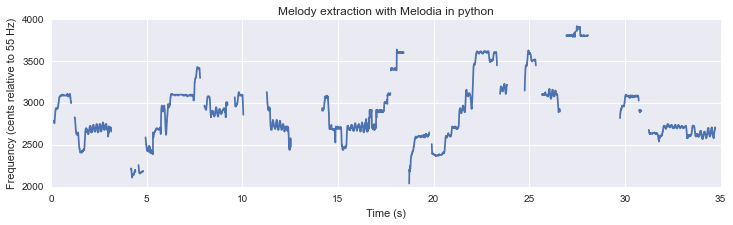

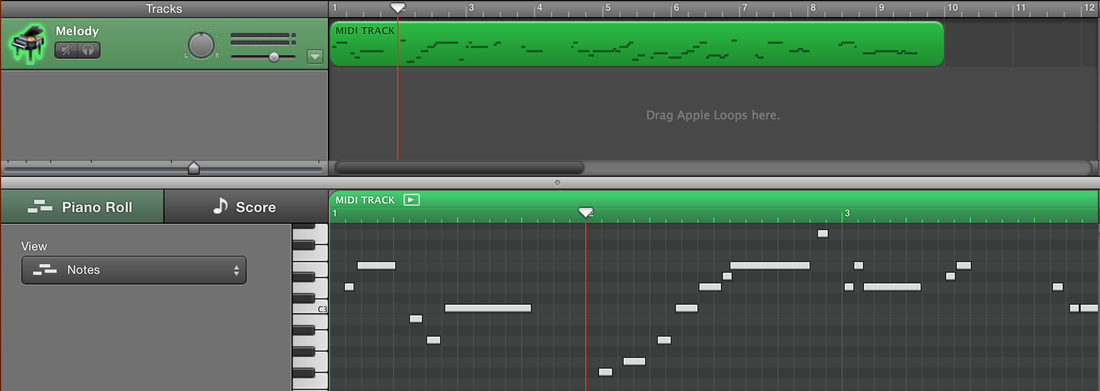

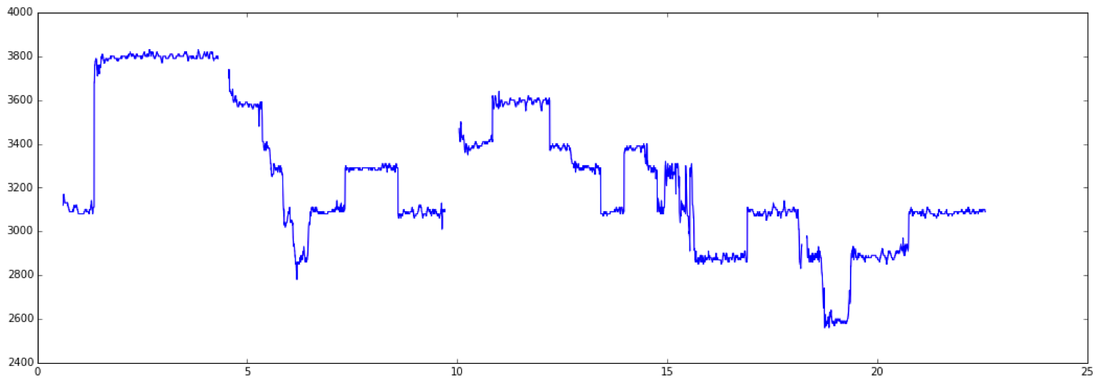

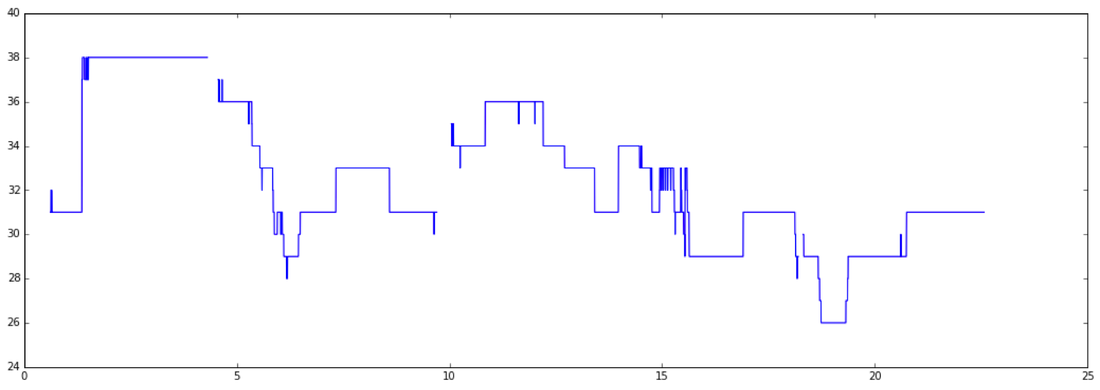

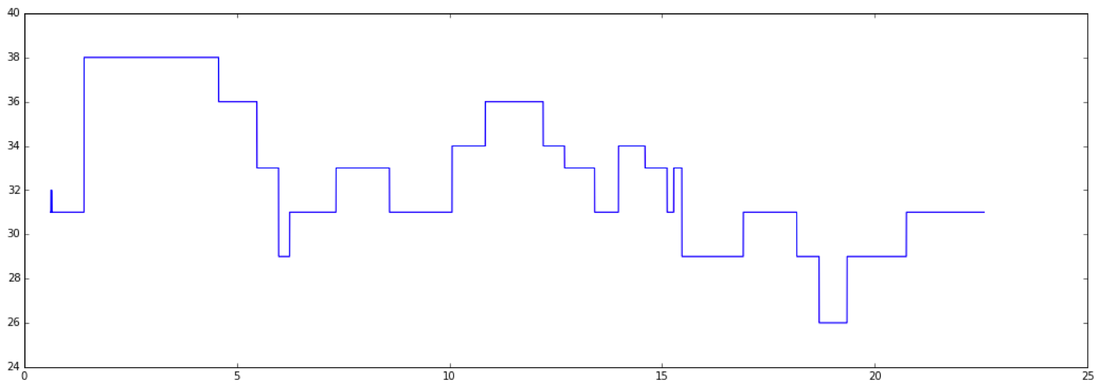

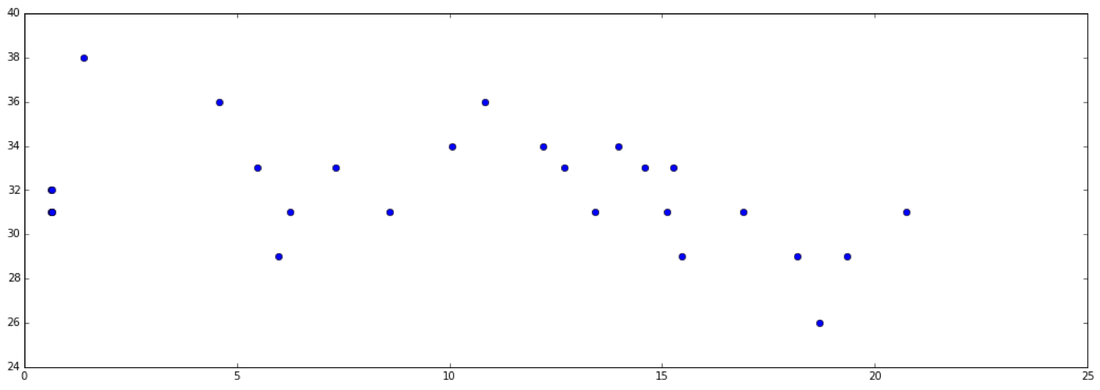

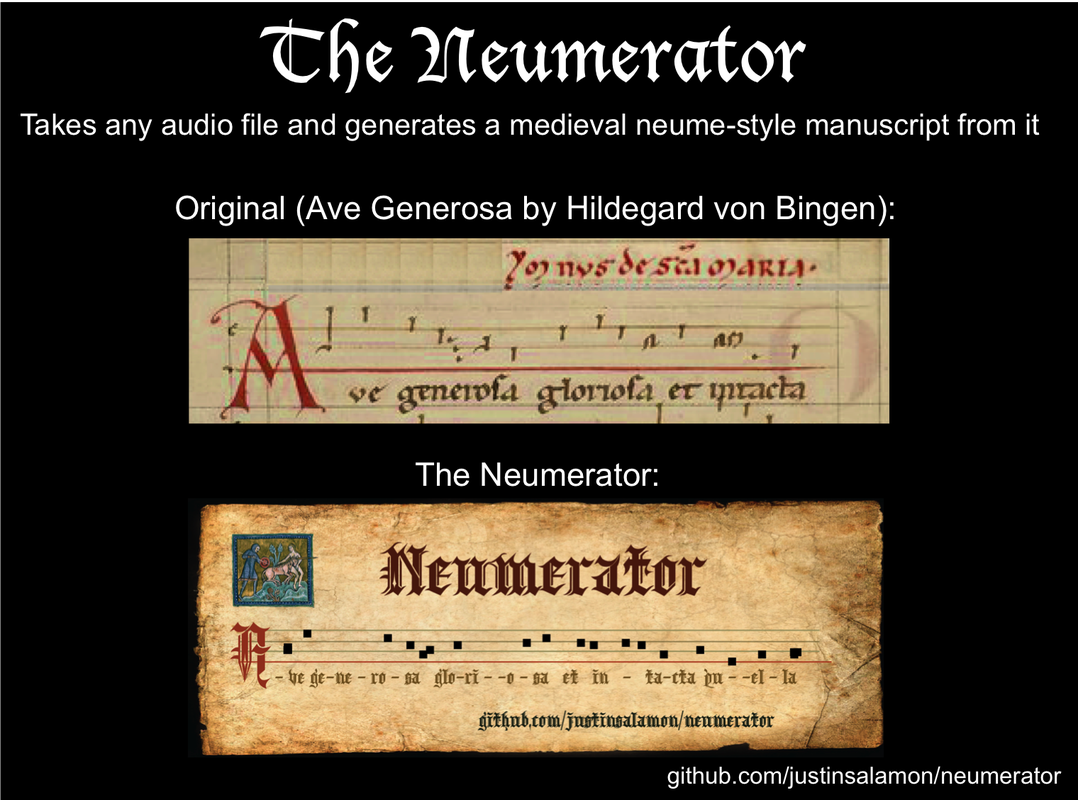

Pitch Contours as a Mid-Level Representation for Music Informatics

R. M. Bittner, J. Salamon, J. J. Bosch, and J. P. Bello.

In AES Conference on Semantic Audio, Erlangen, Germany, Jun. 2017.

[PDF]
